I’ll admit up top that the point I’m going to make in this article will likely come across as something that’s painfully obvious. But, unfortunately, I think it’s necessary to address a research interpretation error that seems to be increasingly common. So, what point am I trying to make in this article?

Strength data is less informative about hypertrophy than hypertrophy data is.

That’s it. That’s the point. If you already understand this very basic concept, you can stop reading now. If you had to re-read the statement because it seemed so obvious that you felt like you were missing something, I understand.

But, I felt the need to write this article because the idea that you can make reliable inferences about hypertrophy from strength data is becoming increasingly common. Even worse, so is the idea that strength data is actually better than hypertrophy data for making inferences about hypertrophy.

As is the case with many dubious approaches to research interpretation, the surge in popularity of this (outrageously dumb) idea comes not from the scientific literature itself, but from motivated reasoning and interpersonal conflict on social media.

If you follow “evidence-based fitness” drama, you’re probably aware of a recent series of pre-printed meta-regressions by Pelland and colleagues. If not, a) kudos (for missing the drama, not the paper), and b) I should be able to get you up to speed pretty quickly.

Long story short, there’s a decades-old feud about the impact of training volume on muscle growth. Many of the current-day participants in this feud probably don’t even realize they’re stepping into an argument that’s been raging since (at least) the 1960s. But, each generation of lifters, bodybuilders, and strength nerds has had a contingent of people arguing that higher training volumes (generally) lead to more muscle growth, and another contingent of people arguing that muscle growth is maximized at relatively low training volumes, and that further increases in volume are “junk volume” (additional work yielding no additional results) at best, and a one-way ticket to burnout, injuries, and overtraining at worst.

Until pretty recently, this argument mostly hinged on wishy-washy presumed mechanisms, logical extrapolations, and examples of bodybuilders who achieved great success with either higher-volume or lower-volume approaches to training. There simply wasn’t much empirical evidence on the topic. There were very few studies investigating the impact of training volume on muscle growth, and even fewer that employed what most people would consider to be “high volume” training.

We finally started getting some research on the impact of training volume on muscle growth in the mid-90s.

By 2010, there was a grand total of 8 studies on the impact of training volume on muscle growth, but only 3 of them included groups performing more than 10 sets per muscle group, per week.

By 2017, we had 15 studies in total, but only two of them included groups doing at least 20 sets per muscle group per week (the point at which most people would begin to characterize training as “high volume”).

By 2022, we finally had enough high-volume studies (7) to warrant their own meta-analysis.

Finally, in 2024, we got Pelland’s pre-printed meta-regression with 35 total studies on training volume, containing a total of 220 individual effects, including 40 individual effect estimates observed when training with at least 20 sets per week.

Long story short: Things are looking pretty good for training volume.

Now, I don’t intend for this article to be an in-depth discussion of the impact of training volume on muscle growth, or even an in-depth discussion of the Pelland meta-regressions. Rather, I just wanted to set the scene for the most recent battle in the volume wars. After three decades of research, we now have a pretty large body of empirical evidence suggesting that higher training volumes do tend to cause more muscle growth. That put the low-volume camp on the defensive.

When you’re engaged in a scientific discussion, and the weight of the strongest and most direct empirical evidence is overwhelmingly on the side of your opponents, you have approximately three options:

- Admit you were wrong. This is obviously no good. It’s bad for the ego, and may be bad for business if you’ve built an audience by promoting ideas that now appear to be empirically falsified. (Note: this is generally what you should actually do.)

- Retreat back to more indirect forms of evidence. This is actually no good (at least in isolation). Trying to counter a large body of direct longitudinal evidence exclusively with wishy-washy presumed mechanisms and logical extrapolations is the equivalent of bringing a knife to a gun fight.

- Attempt to impugn the veracity, quality, and reliability of all of that pesky empirical evidence.

If you guessed that most of the low-volume combatants sashayed right through door number 3, you’d be correct. This is a time-honored strategy pioneered by big tobacco, and carried forward by all sorts of scoundrels – climate change denialists, COVID denialists, and anti-vax cranks, just to name a few. No matter the strength of the evidence arrayed against you, if you can hold up one or two studies that appear to support your position, and come up with at least a few (more is better) reasonable-sounding arguments to make people question all of the pesky studies you don’t like, you can win a depressingly large number of people over to your way of thinking and stay in the fight.

Again, I don’t intend for this to be an in-depth discussion of training volume, or a full blow-by-blow accounting of the kerfuffle caused by the Pelland meta-regressions. So, we’re not going to dissect the time course of muscle edema, the strength of the repeated bout effect, the impact of rest interval duration, whether people are actually training to failure in these studies, or any of the other active fronts in the volume wars. Rather, I simply wanted to describe the milieu from which emerged the absolute turd of an idea that led to this article.

One of the arguments put forth by the low-volume camp was: In the very same pre-print that reported further increases in muscle growth when increasing training volume beyond 20 sets per week, it’s very curious that strength gains plateaued when training with more than 5 sets per week. Since muscle growth must be contributing to the strength gains that occurred, it’s simply impossible that strength gains would plateau at such a low training volume, but muscle growth would keep increasing at significantly higher training volumes. Therefore, actual muscle growth must have plateaued at much lower training volumes, and the appearance of further increases in muscle growth must be attributable to … something else (progressive increases in muscle edema, a mirage solely attributable to studies with short rest intervals, intimations of fraud or shady meddling from the researchers, etc.).

In other words: We can make more reliable inferences about hypertrophy from strength data than we can from actual hypertrophy data. The strength data appears to conflict with the hypertrophy data, and the strength data should win out.

I fully intended to just ignore this idea because, while it is a very stupid idea, it also appeared to be an idea that was contained to one very stupid argument. That’s not a terribly uncommon dynamic. After all, loads of people allowed themselves to be fooled by the smokescreens deployed by big tobacco in order to obscure the link between cigarettes and lung cancer (because they wanted to keep smoking), but they were totally willing to accept very similar epidemiological evidence that was used to establish the risks associated with other products or substances. In much the same way, I assumed that people were willing to accept a very dumb idea in this one specific domain that has engendered so much fervor in the lifting community, but surely they wouldn’t apply the same very dumb idea to other domains with lower emotional investment … right?

However, much to my chagrin, I was catching up on popular threads in the Stronger By Science subreddit, and my stomach dropped. Someone was putting forth the same argument on a different topic. One meta-regression analyzed the effect of protein intake on hypertrophy, and another analyzed the effect of protein intake on strength gains. The commenter contended that since protein intake beyond 1.5g/kg failed to further increase strength gains, it’s highly unlikely that protein intakes beyond 1.5g/kg were able to increase hypertrophy, despite the fact that the actual hypertrophy meta-regression reported additional hypertrophy at higher protein intakes. Not only that, but the comment was extremely well-received, and currently sits as one of the top 50 most-upvoted comments in the history of the sub. I then asked around a bit, and other folks noted that they’d also seen this style of argument cropping up more and more of late. Unfortunately, this idea seems to have broken containment.

So, that’s why I’m writing this article. I didn’t kill this very dumb idea in its cradle, but I can try to snuff it out before it metastasizes further.

Now, turning to the matter at hand: Why is this such a dumb idea?

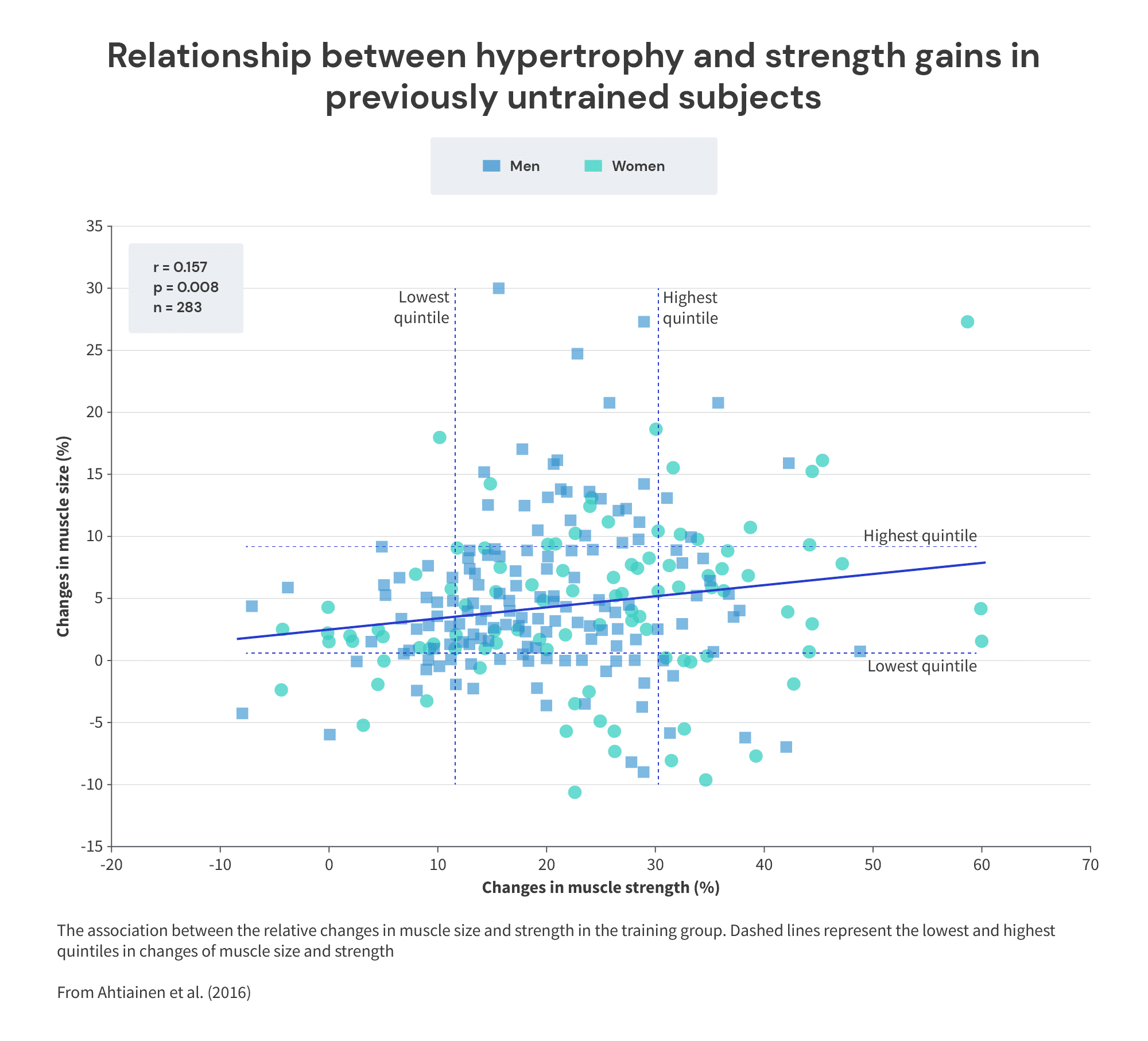

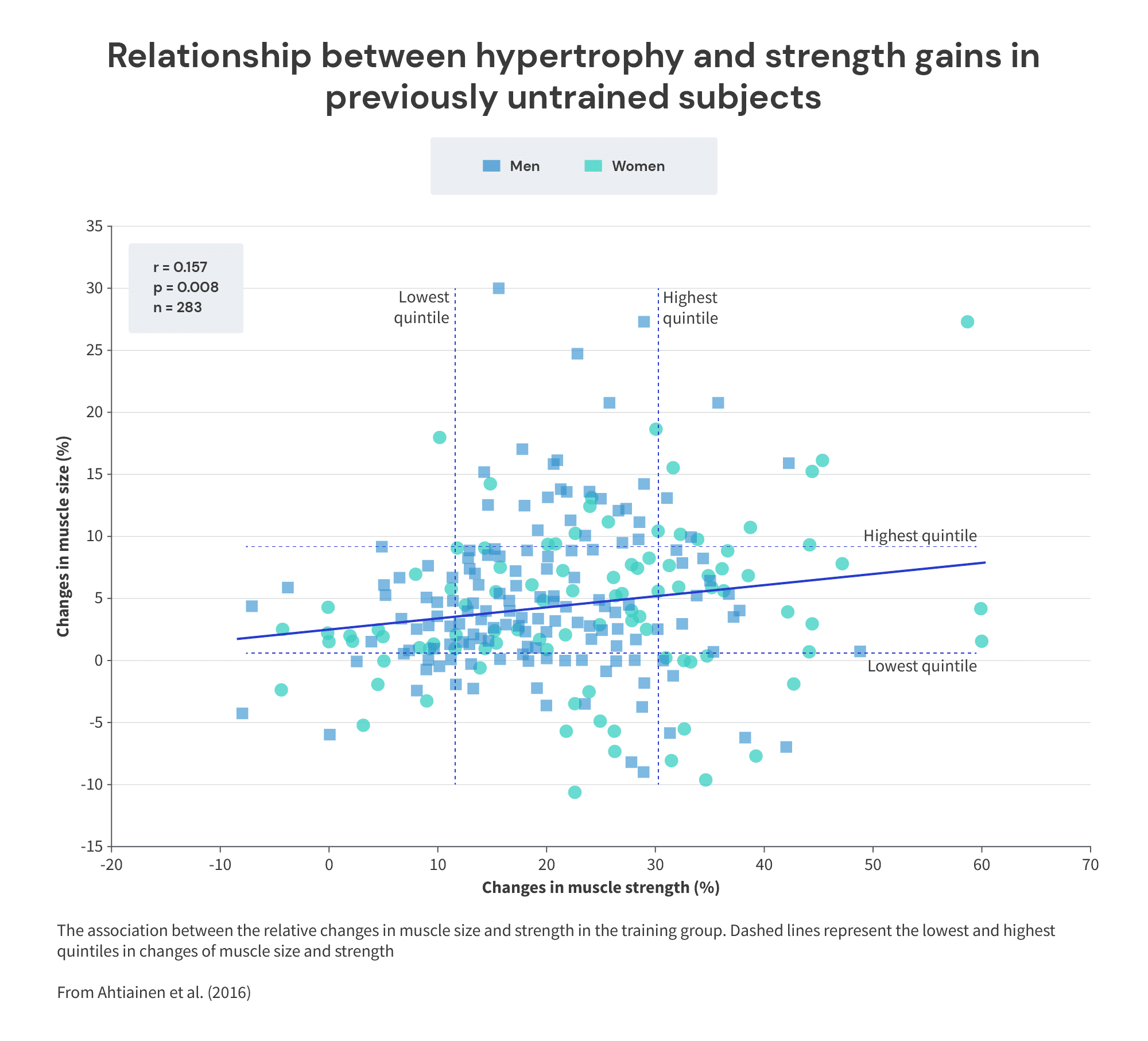

First, for most of the subjects that participate in most of the studies in the published literature, we should expect there to be a pretty weak relationship between muscle growth and strength gains, even without tweaking training variables (like volume) or introducing differing experimental manipulations (like adjusting protein intakes). As discussed in a previous Stronger By Science article, a lot of factors beyond muscle size contribute to strength, and a lot of factors beyond hypertrophy contribute to strength gains. As a result, the correlation between muscle growth (or gains in fat-free mass) and strength gains tends to be of weak-to-moderate strength: r ≅ 0.2 for untrained lifters, and r ≅ 0.6 for trained lifters. In other words, variation in muscle growth only explains ~5-35% of the variation in strength gains in most populations, most of the time, even when everyone completes the same training program. There can be a stronger relationship in specialized populations (powerlifters, for instance), but it’s typically a correlation of at best moderate strength, even when everyone is training with the same volume, intensity, frequency, etc. Once you start manipulating training variables, the associations should be even weaker.
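For anyone who wants to check the "variance explained" arithmetic, here is a minimal Python sketch. It assumes nothing beyond the standard relationship that the share of variance explained by a simple linear association is the square of the Pearson correlation; the function name is just an illustrative choice.

```python
def variance_explained(r: float) -> float:
    """Share of variance in one variable accounted for by a linear
    relationship with another, given their Pearson correlation r."""
    return r ** 2


# The two correlations quoted in the paragraph above.
for r in (0.2, 0.6):
    print(f"r = {r:.1f} -> variance explained = {variance_explained(r):.0%}")
```

Squaring 0.2 and 0.6 gives 4% and 36%, which is where the rounded "~5-35%" range comes from.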

Second, it’s quite obvious that most of the strength gains observed in most studies are not primarily due to muscle growth. If you scroll back up to the figures from the Pelland meta-regression, you can see that most of the hypertrophy studies observed increases in muscle size within a range of about 0-10%. For strength, most of the studies observed increases in a range of about 0-30%. The average relative strength increase was around three times larger than the average relative increase in muscle size. Or, for a more systematic treatment, the typical effect size for hypertrophy observed in the literature is 0.34, and the typical effect size for strength gains is 0.87. The relative difference in exponentiated response ratios (which are unaffected by baseline standard deviations) is even larger: 5.13% for hypertrophy, versus 22.14% for strength.

In other words, even if you assumed that muscle growth causally and linearly increases strength in a direct, deterministic 1:1 manner (not a great assumption, for the record), only about 1/4th-1/3rd of the strength gains observed in the research (on average) could be attributed to muscle growth. Or, stated in the opposite direction, most of the strength gains we observe in most studies are not primarily attributable to muscle growth. Subjects simply improving their general motor skills with the exercises used to assess strength, subjects increasing their specific skill of performing 1RMs, and (I suspect) connective tissue adaptations that allow for more efficient intramuscular force transfer play a much larger role.
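The back-of-the-envelope version of that calculation can be sketched in a few lines of Python. The only inputs are the figures quoted above (5.13% and 22.14% from the response ratios, 0.34 and 0.87 for the effect sizes). The function name and the generous 1:1 assumption baked into it are illustrative, not something taken from the meta-regression itself.

```python
def max_share_from_growth(hypertrophy_gain: float, strength_gain: float) -> float:
    """Upper bound on the fraction of a strength gain that muscle growth
    could account for, ASSUMING every 1% of growth yields 1% of strength."""
    return hypertrophy_gain / strength_gain


# Percentage changes derived from the exponentiated response ratios.
share_from_ratios = max_share_from_growth(5.13, 22.14)

# Standardized effect sizes; a cruder comparison, since these are scaled
# by baseline standard deviations.
share_from_effect_sizes = max_share_from_growth(0.34, 0.87)

print(f"Response ratios: {share_from_ratios:.0%}")
print(f"Effect sizes:    {share_from_effect_sizes:.0%}")
```

The response-ratio comparison lands a little under one quarter, and the effect-size comparison lands a little under two fifths. Either way, well over half of the average strength gain is left for something other than muscle growth to explain, even under the most generous assumption.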

Simply put, even with the most optimistic set of assumptions, we shouldn’t expect most variables to have the same impact (in terms of both overall magnitude and dose-response relationship) on both muscle growth and strength gains because muscle growth is categorically not responsible for most of the strength gains observed in most studies. It would only be reasonable to assume that you should see the same effects if you were to assume that the impact of a particular variable on muscle growth is identical to the impact of that same variable on every other contributor to strength gains (or that the same variable has absolutely no impact on every other contributor to strength gains).
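To make that logic concrete, here is a toy Python model. Every curve and constant in it is made up purely for illustration (none of it is fitted to the Pelland data or any other study). It keeps the generous 1:1 assumption, lets hypothetical muscle growth keep climbing with volume, and lets the hypothetical non-hypertrophy contributors to strength (skill, 1RM practice, and so on) saturate at low volumes.

```python
import math


def hypertrophy_gain(sets_per_week: float) -> float:
    """HYPOTHETICAL % muscle growth: keeps rising, with diminishing returns."""
    return 2.0 * math.log1p(sets_per_week)


def other_strength_gain(sets_per_week: float) -> float:
    """HYPOTHETICAL % strength gain from non-hypertrophy factors:
    saturates after just a few weekly sets."""
    return 18.0 * (1.0 - math.exp(-sets_per_week / 2.0))


def strength_gain(sets_per_week: float) -> float:
    """Total % strength gain, assuming growth converts to strength 1:1."""
    return hypertrophy_gain(sets_per_week) + other_strength_gain(sets_per_week)


for sets in (5, 10, 20, 30):
    print(
        f"{sets:>2} sets/week: "
        f"growth {hypertrophy_gain(sets):4.1f}%, "
        f"strength {strength_gain(sets):4.1f}%"
    )

# Relative change when going from 5 to 30 weekly sets.
growth_ratio = hypertrophy_gain(30) / hypertrophy_gain(5)
strength_ratio = strength_gain(30) / strength_gain(5)
print(f"growth x{growth_ratio:.2f}, strength x{strength_ratio:.2f}")
```

In this toy setup, growth nearly doubles between 5 and 30 weekly sets while total strength gains rise by only about a quarter, because the largest contributor to strength has already flattened out. A strength curve that looks close to a plateau is therefore perfectly compatible with hypertrophy that is still increasing.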

Third, we have quite a few examples of bodies of research that investigate the impact of a particular variable on muscle growth, and that also investigate the impact of that same variable on strength gains. In the vast majority of cases, we don’t observe identical (or even similar) strength and hypertrophy responses to the same variable or training manipulation.

The most well-known example of this divergence between the strength impact and the muscle growth impact of a particular intervention is probably the research on training intensity (high-load versus low-load training). High-load (>60% of 1RM) and low-load (≤60% of 1RM) training performed to failure quite reliably lead to similar muscle growth, but high-load training also reliably leads to larger strength gains. But, while this is the most well-known example of strength and hypertrophy adaptations diverging in response to the same training manipulation, it’s far from the only example.

Within the Pelland meta-regressions, we have another example: training frequency. Higher training frequencies have a much larger impact on strength gains than muscle growth.

Proximity to failure is another great example. Training closer to failure has a reasonably strong positive effect on muscle growth. However, proximity to failure has virtually no effect on strength gains within the published literature.

Of note, many of the same people who argue that strength results tell us more about the impact of training volume on hypertrophy than the hypertrophy results do, are also of the opinion that training close to failure is absolutely crucial for maximizing muscle growth. It’s curious that they don’t apply the same logic when considering the research on proximity to failure. After all, training closer to failure causes more muscle damage and more edema than training further from failure. If edema and muscle swelling are the actual cause of the apparent (but false) beneficial impact of high training volumes for muscle growth, why are they not also the actual cause of the apparent (but false) beneficial impact of training to failure for muscle growth? Since proximity to failure doesn’t impact strength gains, shouldn’t we also conclude that it doesn’t affect muscle growth? (To be clear, the last two sentences were tongue-in-cheek. I do think that high training volumes and training close to failure are both independently beneficial for muscle growth, and that the relationships between hypertrophy and both proximity to failure and volume are true relationships that aren’t primarily explained by or caused by muscle swelling and edema.)

Moving on, periodization research provides us with another great example of the divergence between hypertrophy and strength gains in response to the same training manipulation. Periodized training tends to cause larger strength gains than non-periodized training, and undulating periodization tends to cause larger strength gains than linear periodization (at least in trained subjects). However, neither periodization (comparing periodized to non-periodized training) nor periodization style (comparing linear to undulating periodization models) significantly impacts muscle growth.

Finally, circling back to nutrition, energy deficits lead to less muscle growth (at best), or even losses in lean mass as energy deficits get larger. However, the same meta-analysis documenting the marked impact of energy deficits on muscle growth failed to find a significant effect of energy deficits on strength gains.

When we zoom out a bit, it becomes clear that divergent muscle and strength responses to the same experimental manipulation are the rule, not the exception. Some things that have a large impact on muscle growth have a much smaller impact (or potentially even no impact) on strength gains (proximity to failure, energy deficits, training volume). Similarly, some things that have a large impact on strength have a much smaller impact (or potentially even no impact) on muscle growth (periodization, training frequency, training intensity). Opposite responses are pretty rare in longitudinal research – I’m struggling to think of long-term experimental manipulations that typically increase strength while decreasing muscle mass, or vice versa. But, it’s extremely common for overall effect magnitudes and dose-response relationships to differ when analyzing the muscle- versus strength-promoting effect of some training (or nutrition) variable or intervention.

Now, if someone were willing to stake out the bold and iconoclastic position that strength adaptations are always a better indicator of hypertrophy than direct measures of hypertrophy are, I might respect them for it. I’d disagree with them, to be sure, but that would at least be novel and internally consistent. However, as it is, the argument that strength data provides a better indication of hypertrophy than hypertrophy data is something I only see deployed when someone is faced with a body of hypertrophy literature that they don’t like. It’s a rejoinder that can look and sound like a sober and reasonable argument until you pause to critically consider it for about 5 seconds. But, since it does have the appearance of a reasonable argument, it can still be effective rhetorically, making people doubt strong evidence in favor of much, much weaker evidence. Now that you’ve read this article, you should be equipped to recognize and dismiss it the next time you encounter it in the wild.

A few very brief notes before signing off:

- I’m in no way saying that proponents of low-volume training are equivalent to big tobacco, COVID denialists, or anti-vax cranks. I’m merely pointing out that (reasonably canny) people tend to rummage around in a common bag of rhetorical tricks when they realize the evidence is against them, but they’re unable or unwilling to change or modify their position.

- I suspect a lot of the people deploying this argument are now doing so in a bit of a “monkey see, monkey do” way. In other words, I suspect they’re repeating it with the assumption that it’s a strong argument because they’ve seen it used by influencers they follow, and they assume those influencers are brighter (or more intellectually honest) than they really are.

- The argument discussed in this article (relying on strength data to make inferences about hypertrophy when you also have a robust body of hypertrophy data) is distinct from simply making weak inferences when faced with a lack of better evidence. For example, if three studies suggest that Thing A increases strength gains, but there are no hypertrophy studies on Thing A, it’s not unreasonable to tentatively assume that Thing A may have a positive effect on hypertrophy as well. But, once there are plenty of hypertrophy studies about Thing A, the hypertrophy results should carry far more weight in whatever hypertrophy-related inferences you make.

- For the record, I do actually think there are still plenty of interesting questions to be asked and discussions to be had related to the impact of volume on muscle growth. But I also don’t find many of the arguments put forth by the current crop of low-volume fans (zealots?) to be particularly interesting or compelling.

- I blocked out the names and username in the screenshot of the comment from the SBS subreddit for a reason. Don’t be weird and bother people.

- Keep the context of this article in mind. I’m just discussing the relationships between hypertrophy and strength changes observed between groups and between studies in the published scientific literature. By no means am I saying that strength changes aren’t a decent indicator of hypertrophy at the level of the individual. In fact, I think that strength gains can be (and often are) a pretty good indicator of hypertrophy in certain contexts. However, those contexts significantly differ from the conditions of most studies.